Dead Internet Theory: reality or conspiracy?

There is a theory that suggests that the Internet, once a realm of human creativity and freedom, is now actually a dead place, digitally rotting in its empty artificiality. According to this theory, most of the online content today is generated by algorithms and automated bots that interact with each other. This is the Dead Internet Theory.

According to it, millions of bots have been created and released online year after year with the aim of generating artificial content to manipulate human users by exploiting recommendation algorithms. At the top, governments and corporations would be the puppet masters of these armies of bots.

It is unclear when this theory was first proposed, although its earliest traces date back no more than ten years.

Despite speculation, it is undeniable that the Internet ecosystem is changing very rapidly, especially due to generative artificial intelligence and the myriad of connected smart devices that communicate with each other. More than a conspiracy theory, today the Dead Internet Theory risks becoming a descriptive model of the current state of affairs.

What it is all about

Before delving deeper, let's look at the key elements of the Dead Internet Theory:

Proliferation of bots and automated content: The theory claims that most of the content on the Internet, including social media posts, comments, and articles, is generated by bots and algorithms rather than humans.

Control by corporations and governments: The theory holds that Big Tech companies like Google and Facebook exert significant control over the content that appears online, promoting automated content to manipulate public opinion and maximize profit.

Decline of authenticity: According to the theory, there has been a significant decline in the amount of authentic content created by real users over the last decade.

Manipulation of information: The massive presence of bots, tirelessly generating artificial content, is used to manipulate algorithms (and thus the information people see), influence political and social opinions, and control the global narrative.

Scarcity of real data and interactions: Finally, the theory suggests that genuine data and interactions are increasingly difficult to find online, as the Internet is dominated by this artificial algorithmic "noise."

Put this way, it does not seem too far from reality, and there is even data supporting this thesis. One example is the Bad Bot Report by Imperva, a major cybersecurity company.

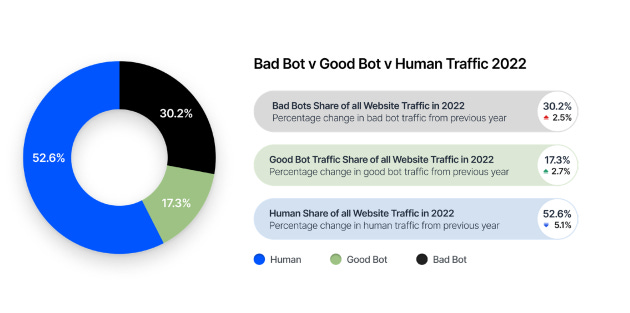

The goal of Imperva's monitoring is to understand the spread and effects of online bots, which are often associated with cyberattacks. According to the report, in 2022 nearly half of all Internet traffic (47.4%) was generated by bots.

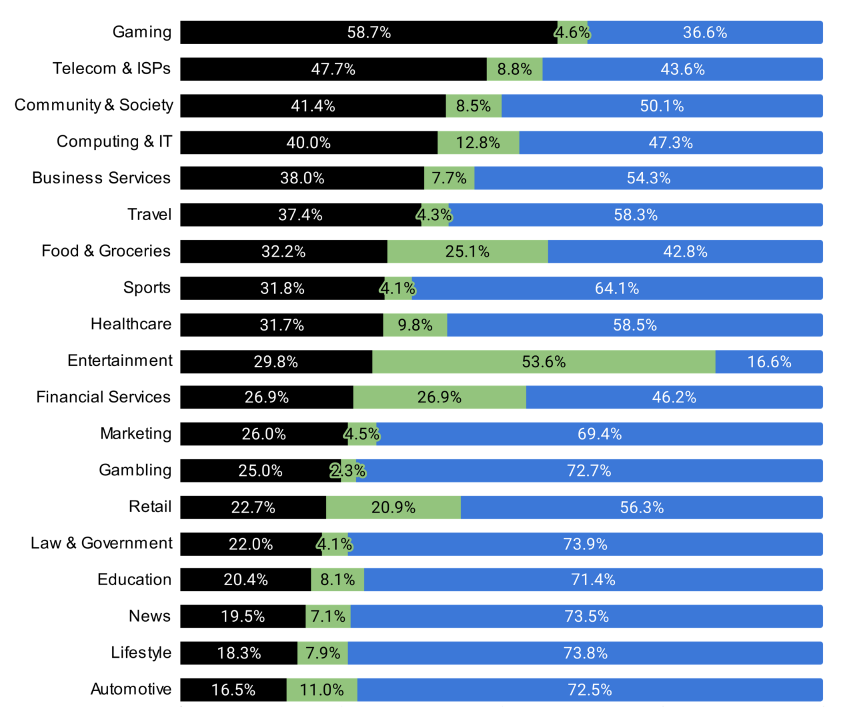

From the report, we can see that in some sectors, especially those more closely tied to digital content, human traffic is already lower than bot traffic.

The entertainment sector is particularly affected: a staggering 83% of traffic belongs to automated bots. Humans are left with a mere 16.6%. This figure is not surprising, considering that today there are numerous ways to automate the creation and management of accounts on YouTube, one of the most used platforms in the entertainment industry.

Even Elon Musk seems concerned. After acquiring Twitter (now X), he launched an ongoing battle against bots, leading to the introduction of account verification mechanisms to limit the phenomenon. Facebook is also on the front lines in combating bots: in 2022 alone, it removed 5.8 billion fake accounts.

The phenomenon is also of interest to lawmakers, particularly in Europe, where it is being used as a pretext to impose laws against disinformation and illegal content, as well as for the identification of human users.

Feedback loop and self-referentiality

Beyond the technical and legislative aspects, the proliferation of bots and automated content has significant implications for the very nature of the Internet and the way we interact with cyberspace.

Machine learning algorithms used to recommend online content (such as Instagram reels or the X homepage) constantly rely on historical data to decide which content to propose to users. This means that every output is always, in some way, an echo of the past.

Every decision is therefore shaped by previous decisions and existing data, reinforcing a cyclical connection to the past. This would not be a big problem in a scenario where algorithms make decisions based on human-generated data—as predictable as we are, humans always retain that spark of chaos and creativity that allows us to break and renew the feedback loop.
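The echo-of-the-past mechanism can be sketched with a toy simulation. This is a minimal illustration under stated assumptions, not a description of how any real platform works: the feed always shows the item with the most historical clicks, and the hypothetical `explore` parameter stands in for the human "spark of chaos", the probability that a user ignores the feed and picks something at random. The names `run_feed` and `items_with_clicks` are invented for this example.

```python
import random


def run_feed(steps, n_items=50, explore=0.0, seed=0):
    """Simulate a feed that recommends from click history; return clicks per item."""
    rng = random.Random(seed)
    clicks = [0] * n_items  # historical click count per item
    for _ in range(steps):
        if rng.random() < explore:
            # Assumed human behavior: ignore the feed, pick any item at random.
            chosen = rng.randrange(n_items)
        else:
            # Assumed recommender: show the item with the most past clicks
            # (ties go to the lowest index).
            chosen = clicks.index(max(clicks))
        clicks[chosen] += 1
    return clicks


def items_with_clicks(clicks):
    """Count how many distinct items ever received attention."""
    return sum(1 for c in clicks if c > 0)


closed_loop = run_feed(steps=1000, explore=0.0)
with_humans = run_feed(steps=1000, explore=0.2)
print("items seen, history only:", items_with_clicks(closed_loop))
print("items seen, with human exploration:", items_with_clicks(with_humans))
```

With `explore=0.0` the feed never leaves the first item it ever showed, because each recommendation only confirms the existing history. Any positive `explore` value lets new items enter that history.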

However, the situation changes if we place this mechanism in a context dominated by artificially generated content, produced by bots and algorithms that feed on synthetic data, that is, on content created by other bots and algorithms.

The risk becomes evident: total self-referentiality. Models will continue to generate outputs based on inputs that, in turn, derive from previous algorithmic outputs, creating a degenerative recursive loop.
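This degenerative loop can be illustrated with a toy simulation. It is only a sketch under strong assumptions: each piece of content is reduced to an integer ID standing for a distinct idea, bots produce new content by copying a random item from the previous generation, and the hypothetical `human_items` parameter is the number of genuinely new ideas added per generation. The function name `simulate_diversity` is invented for this example.

```python
import random


def simulate_diversity(generations, population=1000, human_items=0, seed=0):
    """Return the number of distinct ideas in circulation at each generation."""
    rng = random.Random(seed)
    # Generation 0: every item is an original idea with its own ID.
    items = list(range(population))
    next_id = population
    history = [len(set(items))]
    for _ in range(generations):
        # Assumed bot behavior: copy an item from the previous generation.
        new_items = [rng.choice(items) for _ in range(population - human_items)]
        # Assumed human behavior: contribute ideas that did not exist before.
        for _ in range(human_items):
            new_items.append(next_id)
            next_id += 1
        items = new_items
        history.append(len(set(items)))
    return history


closed_loop = simulate_diversity(generations=50)
with_humans = simulate_diversity(generations=50, human_items=200)
print("distinct ideas, bots only:", closed_loop[0], "->", closed_loop[-1])
print("distinct ideas, with humans:", with_humans[0], "->", with_humans[-1])
```

In the bots-only run the count of distinct ideas can never rise, since copying cannot create a new ID, so diversity only erodes from one generation to the next. With human contributions, each generation contains at least `human_items` ideas that were not in the previous one.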

The Internet would thus transform into a closed ecosystem: an archive of informational simulacra stuck in a state of quasi-past and quasi-present, where thought flattens into an echo without origin, serving only to manipulate users' perceptions.

Creativity or algorithmic optimization?

Now, if algorithms decide which content emerges, and bots and AI are used to produce content optimized for visibility and monetization, what remains for humans? Competing with machines, adapting to their logic to stay relevant. Creativity is no longer a free act, but a forced game of algorithmic optimization: winning means satisfying the algorithm better and faster than the competition, whether they are other humans or bots.

This is not science fiction—it is already reality. Influencers do not work for themselves but for the algorithm of their preferred platform. The creative process is reduced to a race for maximum conformity to the parameters of recommendation algorithms, which in turn are influenced by countless bots and other algorithms.

And it is not just about influencers: every creative industry is now infiltrated by this dynamic. At this point, is there really a difference between content entirely generated by a bot and content created by a human who bends and adapts to algorithmic logic? When humans become mere extensions of code, how much of their content remains authentically human?

Brain Dead Society

The spread of bots and artificially generated content online is just the beginning. What we see clearly on entertainment platforms is also seeping into the real world.

LLMs like ChatGPT can now generate highly realistic and specialized textual content, such as administrative documents, legal opinions, even court rulings. This will inevitably lead to the emergence of recursive degenerative phenomena in public administration and the legal system, establishing feedback loops where future acts and rulings will be based on past algorithmic inputs—which were, in turn, influenced by other automated content.

What happens when legal precedents are no longer the result of the creativity of lawyers and judges, but simply alignments with past algorithmic decisions suspended in quasi-past and quasi-present?

What happens is that human society slows down, eventually coming to a halt in a dimension frozen between present and past, where social conformity becomes the norm. Any revolution—creative, legal, or administrative—must first pass through the filter of self-referential algorithms.

The question remains: is the Dead Internet Theory really a conspiracy theory, or has it already become a reality?
